Lyrical Particles is a music-lyrics visualisation system that accepts two pieces of input: a music file, and a timed representation of the lyrics in the form of a CSV file.

Both the waveform and the beat information are extracted from the music file and mapped onto aspects of the particle systems on display.

[sr_video][/sr_video]

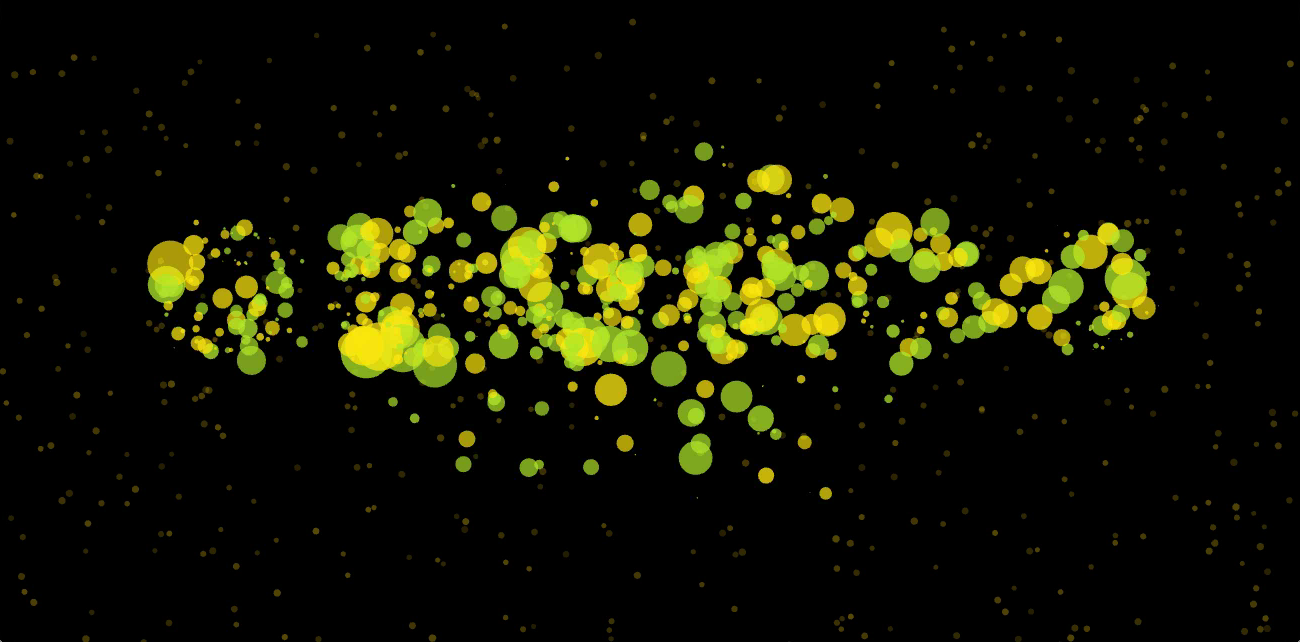

The size of the particles in the main particle system corresponds to the amplitude of a sample of the waveform at that instant. The size of the particles in the distant, background particle system is controlled by the beat of the song, while the rate of their movement (once they do start moving) also corresponds to the waveform of the song.
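As a rough illustration of this mapping (a minimal sketch, not the actual implementation), the Python below assumes the waveform is available as a list of samples normalised to [-1, 1] and the beats as a list of timestamps in seconds. The function names, the size ranges, and the exponential decay after each beat are all hypothetical choices made for the example.

```python
import math

def amplitude_at(samples, sample_rate, t):
    """Return the absolute amplitude of the sample at time t (seconds)."""
    if not samples:
        return 0.0
    # Clamp the index so times outside the track map to its first/last sample.
    index = min(max(int(t * sample_rate), 0), len(samples) - 1)
    return abs(samples[index])

def main_particle_size(amplitude, min_size=2.0, max_size=12.0):
    """Linearly map an amplitude in [0, 1] to a particle size (assumed range)."""
    a = min(max(amplitude, 0.0), 1.0)
    return min_size + a * (max_size - min_size)

def background_particle_size(t, beat_times, base_size=1.0, pulse=3.0, decay=0.25):
    """Pulse the size on each beat, then decay back towards base_size.

    The exponential decay is an assumption; any easing curve would do.
    """
    past = [b for b in beat_times if b <= t]
    if not past:
        return base_size  # no beat has happened yet
    since_beat = t - max(past)
    return base_size + pulse * math.exp(-since_beat / decay)
```

Each frame, the renderer would call `main_particle_size(amplitude_at(samples, sample_rate, t))` for the foreground system and `background_particle_size(t, beat_times)` for the background one.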

The main particle system serves another function: it is a visualizer of the lyrics of the song. According to the provided CSV file in which the lyrics and their timestamps are specified, the particles in this system self-arrange to display the portion of the lyrics that belongs to that instant of time.
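The lookup of the current lyric could be sketched as follows. The CSV layout here is an assumption (one `start_seconds,text` row per lyric portion, no header row); the actual file format may differ, and `load_lyrics` and `lyric_at` are hypothetical names.

```python
import csv
import io
from bisect import bisect_right

def load_lyrics(csv_text):
    """Parse 'start_seconds,text' rows into a list sorted by start time."""
    rows = []
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) < 2:
            continue  # skip blank or malformed rows
        rows.append((float(row[0]), row[1].strip()))
    rows.sort(key=lambda r: r[0])
    return rows

def lyric_at(lyrics, t):
    """Return the lyric portion active at time t, or '' before the first one."""
    starts = [start for start, _ in lyrics]
    # bisect_right finds the first entry starting after t; step back one.
    i = bisect_right(starts, t) - 1
    return lyrics[i][1] if i >= 0 else ""
```

With the text returned by `lyric_at`, the particle system can then pick target positions that spell out that portion of the lyrics.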

To expand the system’s capabilities further, I plan to add, if time allows, the ability to identify the song from its basic characteristics, connect to the internet to fetch its lyrics, and determine the timing of each portion of the lyrics using voice recognition algorithms. This would effectively eliminate the need for the secondary CSV lyrics file in the current implementation.

Another direction I wish to explore is adding an interactive layer that recognizes the viewer's speech and visualizes it through the main particle system on display.
